> [TOC]

# Cluster Environment Setup

Set up a cluster on three machines, lab1, lab2, and lab3, running Hadoop, ZooKeeper, Kafka, and HBase.

# 1. Java

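1. Upload the Java installation package to /opt/modules/ on lab1 and extract it

```shell
cd /opt/modules
tar -zxf jdk-8u301-linux-x64.tar.gz
# The JDK 8u301 archive extracts to jdk1.8.0_301; rename it to match JAVA_HOME below
mv jdk1.8.0_301 java
```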

2. Add the following environment variables to /etc/profile

```shell
export JAVA_HOME=/opt/modules/java
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib/rt.jar
export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/opt/modules/hadoop-3.1.3
export PATH=$PATH:$HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HBASE_HOME=/opt/modules/hbase-2.2.6
export PATH=$PATH:$HBASE_HOME/bin

export KAFKA_HOME=/opt/modules/kafka_2.11-2.1.1
export PATH=$PATH:$KAFKA_HOME/bin
```

3. Apply the environment variables

```shell

source /etc/profile

```

# 2. Hadoop Cluster Setup

Hadoop cluster layout:

|      | lab1              | lab2                        | lab3                       |
| ---- | ----------------- | --------------------------- | -------------------------- |
| HDFS | NameNode DataNode | DataNode                    | SecondaryNameNode DataNode |
| YARN | NodeManager       | ResourceManager NodeManager | NodeManager                |

1. Upload the Hadoop installation package to /opt/modules/ on lab1 and extract it

```shell

tar -zxf hadoop-3.1.3.tar.gz

```

2. Go to /opt/modules/hadoop-3.1.3/etc/hadoop and edit core-site.xml, hdfs-site.xml, yarn-site.xml, and mapred-site.xml, as well as hadoop-env.sh and workers.

```shell
cd /opt/modules/hadoop-3.1.3/etc/hadoop
```

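```shell
vi core-site.xml
# Add the following properties inside <configuration>
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://lab1:8020</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/opt/modules/hadoop-3.1.3/data</value>
</property>
<property>
  <name>hadoop.http.staticuser.user</name>
  <value>root</value>
</property>
```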
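Each setting in these Hadoop files is a name/value pair wrapped in a `<property>` element inside `<configuration>`. As a minimal sketch, the helper below prints one such element per pair, so the pairs listed in this section can be turned into XML. The function name `hadoop_property` is hypothetical and not part of Hadoop.

```shell
# Hypothetical helper: print one Hadoop <property> element for a name/value pair.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Example: the three core-site.xml settings used in this guide.
{
  echo '<configuration>'
  hadoop_property fs.defaultFS hdfs://lab1:8020
  hadoop_property hadoop.tmp.dir /opt/modules/hadoop-3.1.3/data
  hadoop_property hadoop.http.staticuser.user root
  echo '</configuration>'
}
```

The output can be compared against the edited file before copying it to the other nodes.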

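```shell
vi hdfs-site.xml
# Add the following properties inside <configuration>
<property>
  <name>dfs.namenode.http-address</name>
  <value>lab1:9870</value>
</property>
<property>
  <name>dfs.namenode.secondary.http-address</name>
  <value>lab3:9868</value>
</property>
```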

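```shell
vi yarn-site.xml
# Add the following properties inside <configuration>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>lab2</value>
</property>
<property>
  <name>yarn.nodemanager.env-whitelist</name>
  <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
</property>
```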

```shell
vi mapred-site.xml
# Add the following property inside <configuration>
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
```

```shell
vi hadoop-env.sh
# Set the Java environment variable
export JAVA_HOME=/opt/modules/java
# Add the following settings
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

```shell
vi workers
# List the worker hosts, one per line
lab1
lab2
lab3
```

3. Upload the Hadoop installation package to /opt/modules/ on lab2 and lab3 and extract it

```shell

tar -zxf hadoop-3.1.3.tar.gz

```

4. Replace the corresponding files on lab2 and lab3 with the files edited above
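One way to do this replacement is to copy the edited files from lab1 with `scp`. The sketch below assumes passwordless SSH from lab1 to the other nodes, which this guide does not set up. By default it only prints the commands. The function name `sync_conf` and the `DRY_RUN` switch are illustrative.

```shell
# Sketch: copy the edited Hadoop config files from lab1 to the other nodes.
# DRY_RUN=1 (the default here) only prints the scp commands instead of running them.
CONF_DIR=/opt/modules/hadoop-3.1.3/etc/hadoop
FILES="core-site.xml hdfs-site.xml yarn-site.xml mapred-site.xml hadoop-env.sh workers"

sync_conf() {
  for host in "$@"; do
    for f in $FILES; do
      cmd="scp $CONF_DIR/$f $host:$CONF_DIR/$f"
      if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$cmd"
      else
        $cmd
      fi
    done
  done
}

sync_conf lab2 lab3
```

Setting `DRY_RUN=0` before the call runs the copies for real.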

5. If this is the first time the cluster is started, format the NameNode on lab1

```shell

hdfs namenode -format

```

6. Go to /opt/modules/hadoop-3.1.3/sbin on lab1 and start HDFS

```shell

./start-dfs.sh

```

Use jps to check the running processes:

lab1

```shell

DataNode

NameNode

```

lab2

```shell

DataNode

```

lab3

```shell

SecondaryNameNode

DataNode

```

7. Go to /opt/modules/hadoop-3.1.3/sbin on lab2 and start YARN

```shell

./start-yarn.sh

```

lab1

```shell

NodeManager

```

lab2

```shell

ResourceManager

NodeManager

```

lab3

```shell

NodeManager

```
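The per-host process lists above can be checked mechanically. As a rough sketch, the function below takes `jps` output and a list of expected daemon names and prints any that are missing. The name `missing_procs` and the sample output are made up for illustration.

```shell
# Sketch: report which expected daemons are missing from jps output.
# $1 = jps output (text), remaining arguments = expected process names.
missing_procs() {
  jps_out=$1
  shift
  for p in "$@"; do
    # jps prints "<pid> <MainClass>"; match the class name at the end of a line.
    echo "$jps_out" | grep -q " $p\$" || echo "$p"
  done
}

# Example with canned output for lab1 (on a real node, pass "$(jps)" instead).
sample='1201 NameNode
1345 DataNode
1690 Jps'
missing_procs "$sample" NameNode DataNode NodeManager
```

With the canned output, the only name printed is `NodeManager`, since YARN has not been started in that sample.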

8. Open http://lab1:9870 and http://lab2:8088 in a browser to view the HDFS NameNode and the YARN ResourceManager

# 3. ZooKeeper Cluster Setup

1. Upload the ZooKeeper installation package to /opt/modules/ on lab1 and extract it

```shell

tar -zxf zookeeper-3.4.14.tar.gz

```

2. Go to /opt/modules/zookeeper-3.4.14/conf/ and edit zoo.cfg

```shell
mv zoo_sample.cfg zoo.cfg
vi zoo.cfg
# Change the following setting
dataDir=/opt/modules/zookeeper-3.4.14/data/zData
# Add the following lines
server.1=lab1:2888:3888
server.2=lab2:2888:3888
server.3=lab3:2888:3888
```

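3. Create the /opt/modules/zookeeper-3.4.14/data/zData/ directory and a myid file in it

```shell
mkdir -p /opt/modules/zookeeper-3.4.14/data/zData
cd /opt/modules/zookeeper-3.4.14/data/zData
echo 1 > myid
```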

4. Upload the ZooKeeper installation package to /opt/modules/ on lab2 and lab3 and extract it

```shell
tar -zxf zookeeper-3.4.14.tar.gz
```

5. Replace the corresponding files on lab2 and lab3 with the files edited above

6. On lab2 and lab3, edit /opt/modules/zookeeper-3.4.14/data/zData/myid and change the 1 to 2 and 3 respectively
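Because the host names follow the pattern lab1, lab2, lab3 and the ids in zoo.cfg follow `server.N`, the id can be derived from the host name instead of edited by hand. This is only a sketch under that naming assumption, and `myid_for_host` is a hypothetical helper.

```shell
# Sketch: map a hostname of the form lab<N> to ZooKeeper id N
# (matching server.N=labN:2888:3888 in zoo.cfg).
myid_for_host() {
  case "$1" in
    lab[1-9]) echo "${1#lab}" ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}

# On each node, something like this would write the id (path from this guide):
#   myid_for_host "$(hostname)" > /opt/modules/zookeeper-3.4.14/data/zData/myid
myid_for_host lab2
```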

7. Start ZooKeeper from /opt/modules/zookeeper-3.4.14/bin on all three machines

```shell
./zkServer.sh start
```

Check the status:

```shell
./zkServer.sh status
```

8. Use jps to check the running processes

lab1:

```

QuorumPeerMain

```

lab2:

```

QuorumPeerMain

```

lab3:

```

QuorumPeerMain

```

# 4. Kafka Cluster Setup

1. Upload the Kafka installation package to /opt/modules/ on lab1 and extract it

```shell

tar -zxf kafka_2.11-2.1.1.tgz

```

2. Edit server.properties, producer.properties, and consumer.properties in /opt/modules/kafka_2.11-2.1.1/config/, and kafka-run-class.sh and kafka-server-start.sh in /opt/modules/kafka_2.11-2.1.1/bin/

```shell
vi server.properties
# Change the following settings
# ID of this broker
broker.id=0
# Host name or IP of this broker
listeners=PLAINTEXT://lab1:9092
zookeeper.connect=lab1:2181,lab2:2181,lab3:2181
# Add the following setting
delete.topic.enable=true
```

```shell
vi producer.properties
# Change the following setting
bootstrap.servers=lab1:9092,lab2:9092,lab3:9092
```

```shell
vi consumer.properties
# Change the following setting
bootstrap.servers=lab1:9092,lab2:9092,lab3:9092
```

```shell
vi kafka-run-class.sh
# Change KAFKA_JMX_OPTS as follows.
# Set java.rmi.server.hostname to this server's IP address or domain name.
# responseTimeout is the timeout; 9999 is the JMX and RMI port.
KAFKA_JMX_OPTS="
-Dcom.sun.management.jmxremote=true
-Dcom.sun.management.jmxremote.authenticate=false
-Dcom.sun.management.jmxremote.ssl=false
-Djava.rmi.server.hostname=<server-ip-or-hostname>
-Dsun.rmi.transport.tcp.responseTimeout=60000
-Dcom.sun.management.jmxremote.rmi.port=9999
-Dcom.sun.management.jmxremote.port=9999
-Dcom.sun.management.jmxremote.local.only=false"
```

```shell
vi kafka-server-start.sh
# Add export JMX_PORT="9999"
if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then
    export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"
    export JMX_PORT="9999"
fi
```

3. Upload the Kafka installation package to /opt/modules/ on lab2 and lab3 and extract it

```shell

tar -zxf kafka_2.11-2.1.1.tgz

```

4. Replace the corresponding files on lab2 and lab3 with the files edited above

5. On lab2 and lab3, change /opt/modules/kafka_2.11-2.1.1/config/server.properties as follows

lab2:

```shell
vi server.properties
# Change the following settings
# ID of this broker
broker.id=1
# Host name or IP of this broker
listeners=PLAINTEXT://lab2:9092
advertised.listeners=PLAINTEXT://<this server's IP>:9092
zookeeper.connect=lab1:2181,lab2:2181,lab3:2181
# Add the following setting
delete.topic.enable=true
```

lab3:

```shell
vi server.properties
# Change the following settings
# ID of this broker
broker.id=2
# Host name or IP of this broker
listeners=PLAINTEXT://lab3:9092
zookeeper.connect=lab1:2181,lab2:2181,lab3:2181
# Add the following setting
delete.topic.enable=true
```
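The three server.properties files differ only in `broker.id` and `listeners`. As a sketch, the function below prints the per-broker lines for a given host, assuming the lab1 to lab3 naming used in this guide. The name `broker_props` is hypothetical.

```shell
# Sketch: print the per-broker lines of server.properties for host lab<N>.
# broker.id is N-1, matching the values used above (lab1 -> 0, lab2 -> 1, lab3 -> 2).
broker_props() {
  n=${1#lab}
  echo "broker.id=$((n - 1))"
  echo "listeners=PLAINTEXT://$1:9092"
  echo "zookeeper.connect=lab1:2181,lab2:2181,lab3:2181"
  echo "delete.topic.enable=true"
}

broker_props lab3
```

The printed lines still have to be merged into each node's server.properties by hand. The sketch does not edit the file.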

6. Start Kafka from /opt/modules/kafka_2.11-2.1.1/bin on all three machines

```shell

kafka-server-start.sh -daemon ../config/server.properties

```

7. Use jps to check the running processes

lab1:

```shell

Kafka

```

lab2:

```shell

Kafka

```

lab3:

```shell

Kafka

```

# 5. HBase Cluster Setup

1. Upload the HBase installation package to /opt/modules/ on lab1 and extract it

```shell

tar -zxf hbase-2.2.6-bin.tar.gz

```

2. Go to /opt/modules/hbase-2.2.6/conf and edit hbase-env.sh, hbase-site.xml, and regionservers

```shell
vi hbase-env.sh
# Change the following settings
export JAVA_HOME=/opt/modules/java
export HBASE_MANAGES_ZK=false
```

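```shell
vi hbase-site.xml
# Add the following properties inside <configuration>
<property>
  <name>hbase.rootdir</name>
  <!-- The port must match the port in Hadoop's fs.defaultFS -->
  <value>hdfs://lab1:8020/hbase</value>
</property>
<property>
  <name>hbase.cluster.distributed</name>
  <value>true</value>
</property>
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>lab1:2181,lab2:2181,lab3:2181</value>
</property>
<property>
  <name>hbase.tmp.dir</name>
  <value>file:/opt/modules/hbase-2.2.6/tmp</value>
</property>
```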

```shell
vi regionservers
# List the region server hosts, one per line
lab1
lab2
lab3
```

3. Start HBase from /opt/modules/hbase-2.2.6/bin/ on lab1

```shell

./start-hbase.sh

```

4. Use jps to check the running processes

lab1:

```shell

HMaster

HRegionServer

```

lab2:

```shell

HRegionServer

```

lab3:

```shell

HRegionServer

```

# 6. Installing and Using Solr

***

## 6.1. Installing Solr (required on all three machines)

````shell
# 1. Place the installation package solr.zip in /opt/modules
cd /opt/modules
# 2. Extract it
unzip solr.zip
# 3. Create the solr user
sudo useradd solr
echo solr | sudo passwd --stdin solr
# 4. Make the solr user the owner of the solr directory
sudo chown -R solr:solr /opt/modules/solr
# 5. Set the following properties in /opt/modules/solr/bin/solr.in.sh
vim /opt/modules/solr/bin/solr.in.sh
ZK_HOST="lab1:2181,lab2:2181,lab3:2181"
SOLR_HOST="<this node's IP>"
````

## 6.2. Starting the Cluster

```shell
# 1. Start Solr (use stop instead of start to shut it down)
ssh lab1 sudo -i -u solr /opt/modules/solr/bin/solr start
ssh lab2 sudo -i -u solr /opt/modules/solr/bin/solr start
ssh lab3 sudo -i -u solr /opt/modules/solr/bin/solr start
# Alternatively, start in SolrCloud mode with explicit ZooKeeper addresses
sudo -i -u solr /opt/modules/solr/bin/solr start -c -p 8983 -z 150.158.138.99:2181,49.235.67.21:2181,42.192.195.253:2181 -force
# Force start
./solr start -c -p 8983 -z lab1:2181,lab2:2181,lab3:2181 -force
# 2. Create the collections (run on one node only)
sudo -i -u solr /opt/modules/solr/bin/solr create -c vertex_index -d /opt/modules/atlas-2.2.0/conf/solr -shards 3 -replicationFactor 1
sudo -i -u solr /opt/modules/solr/bin/solr create -c edge_index -d /opt/modules/atlas-2.2.0/conf/solr -shards 3 -replicationFactor 1
sudo -i -u solr /opt/modules/solr/bin/solr create -c fulltext_index -d /opt/modules/atlas-2.2.0/conf/solr -shards 3 -replicationFactor 1
# 3. Delete the collections
curl "http://127.0.0.1:8983/solr/admin/collections?action=DELETE&name=edge_index"
curl "http://127.0.0.1:8983/solr/admin/collections?action=DELETE&name=vertex_index"
curl "http://127.0.0.1:8983/solr/admin/collections?action=DELETE&name=fulltext_index"
```

# 7. Installing Atlas (single node; lab2 is used here)

***

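## 7.1. Installing Atlas

````shell
# 1. Place the installation package atlas-2.2.0.zip in /opt/modules
cd /opt/modules
# 2. Extract it
unzip atlas-2.2.0.zip
# 3. Edit the Atlas configuration file
vim /opt/modules/atlas-2.2.0/conf/atlas-application.properties
# Replace every occurrence of lab1, lab2, and lab3 with your own IPs, and change this setting
# (use the address of whichever machine runs Atlas)
atlas.rest.address=http://lab2:21000
# 4. Remove the old commons-configuration jar shipped with HBase
rm -rf /opt/modules/hbase-2.2.6/lib/commons-configuration-1.6.jar
# 5. Move the newer jar into place
mv commons-configuration-1.10.jar /opt/modules/hbase-2.2.6/lib/commons-configuration-1.10.jar
````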

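## 7.2. Integrating Atlas with HBase

````shell
# Add this property to hbase-site.xml
<property>
  <name>hbase.coprocessor.master.classes</name>
  <value>org.apache.atlas.hbase.hook.HBaseAtlasCoprocessor</value>
</property>
# Copy the Atlas configuration file into HBase's conf directory
cp atlas-application.properties /opt/modules/hbase-2.2.6/conf/
# Link the Atlas hook into HBase
# (paths are relative to the Atlas package directory and the HBase home, respectively)
ln -s /hook/hbase/* /lib/
# Make sure atlas-env.sh sets the HBase configuration directory
export HBASE_CONF_DIR=/opt/modules/hbase-2.2.6/conf
# Finally, run the import script
./import-hbase.sh
````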

## 7.3. Integrating Atlas with Hive

```shell
# Add this property to hive-site.xml
<property>
  <name>hive.exec.post.hooks</name>
  <value>org.apache.atlas.hive.hook.HiveHook</value>
</property>
# Copy the Atlas configuration file into Hive's conf directory
cp atlas-application.properties /opt/modules/hive-3.1.2/conf
# Make sure atlas-env.sh sets the HBase configuration directory
export HBASE_CONF_DIR=/opt/modules/hbase-2.2.6/conf
# Add 'export HIVE_AUX_JARS_PATH=/hook/hive' in hive-env.sh of your hive configuration
export HIVE_AUX_JARS_PATH=/opt/modules/atlas-2.2.0/hook/hive
# Finally, run the import script
./import-hive.sh
```

# 8. Atlas Data Recovery

`Atlas` data recovery involves `solr` and two `hbase` tables: `apache_atlas_entity_audit` and `apache_atlas_janus`.

## 8.1. Exporting and Restoring `hbase` Table Data

1. Create a directory in `hdfs` for the export

```shell
hadoop fs -mkdir -p /tmp/hbase/atlas_data
```

2. Export the data of the two tables to `hdfs`

```shell

hbase org.apache.hadoop.hbase.mapreduce.Export apache_atlas_entity_audit hdfs://lab1:8020/tmp/hbase/atlas_data/apache_atlas_entity_audit

hbase org.apache.hadoop.hbase.mapreduce.Export apache_atlas_janus hdfs://lab1:8020/tmp/hbase/atlas_data/apache_atlas_janus

```

3. Create a local directory `atlas_data` and download the exported files into it

```shell
mkdir atlas_data
hadoop fs -get /tmp/hbase/atlas_data/apache_atlas_entity_audit ./atlas_data/
hadoop fs -get /tmp/hbase/atlas_data/apache_atlas_janus ./atlas_data/
```

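4. Upload the two exported directories to `hdfs` on the target machine

```shell
# Copy the atlas_data directory with the two exports to lab2, then upload it
hdfs dfs -put ./atlas_data/ hdfs://lab1:8020/tmp/
```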

5. Inspect the structure of the two `hbase` tables and create them on the target machine. Remove `TTL => 'FOREVER'` from the original table definitions.

```shell

# 1. Create the apache_atlas_janus table

create 'apache_atlas_janus', {NAME => 'e', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false',KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}, {NAME => 'f', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'} ,{NAME => 'g', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false',KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'},{NAME => 'h', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false',KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'} ,{NAME => 'i', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 
'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}, {NAME => 'l', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}, {NAME => 'm', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false',KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false',CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}, {NAME => 's', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false',KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'GZ', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}, {NAME => 't', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 
'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

# 2. Create the apache_atlas_entity_audit table

create 'apache_atlas_entity_audit', {NAME => 'dt', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false',NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'FAST_DIFF', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false',PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'GZ', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

```

6. Import the exported data into the newly created `hbase` tables

```shell

hbase org.apache.hadoop.hbase.mapreduce.Import apache_atlas_entity_audit hdfs://lab1:8020/tmp/atlas_data/apache_atlas_entity_audit

hbase org.apache.hadoop.hbase.mapreduce.Import apache_atlas_janus hdfs://lab1:8020/tmp/atlas_data/apache_atlas_janus

```
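The export and import commands differ only in the tool name and the HDFS base directory, so they can be generated in a loop. This is a sketch that only prints the commands. The helper name `hbase_copy_cmds` is hypothetical, and the paths are the ones used in this section.

```shell
# Sketch: build the Export/Import command for each Atlas table.
# Only prints the commands; pipe the output to sh to actually run them.
TABLES="apache_atlas_entity_audit apache_atlas_janus"

hbase_copy_cmds() {
  mode=$1   # Export or Import
  base=$2   # HDFS directory holding one subdirectory per table
  for t in $TABLES; do
    echo "hbase org.apache.hadoop.hbase.mapreduce.$mode $t $base/$t"
  done
}

hbase_copy_cmds Export hdfs://lab1:8020/tmp/hbase/atlas_data
hbase_copy_cmds Import hdfs://lab1:8020/tmp/atlas_data
```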

## 8.2. Restoring Solr Data (run on a single node; lab2, the Atlas machine above, is used here)

```shell
# 0. Create the backup directory
mkdir -p /opt/modules/solr/backup
# Move the backup files into this directory
mv ./* /opt/modules/solr/backup/
# Give the solr user ownership
chown -R solr:solr /opt/modules/solr/backup
# 1. Create a backup; all of the following commands run as the solr user
su solr
curl 'http://127.0.0.1:8983/solr/fulltext_index/replication?command=backup&location=/opt/modules/solr/backup&name=fulltext_index.bak'
curl 'http://127.0.0.1:8983/solr/vertex_index/replication?command=backup&location=/opt/modules/solr/backup&name=vertex_index.bak'
curl 'http://127.0.0.1:8983/solr/edge_index/replication?command=backup&location=/opt/modules/solr/backup&name=edge_index.bak'
# 2. Restore from backup, after copying the backup files into /opt/modules/solr/backup
curl 'http://127.0.0.1:8983/solr/fulltext_index/replication?command=restore&location=/opt/modules/solr/backup&name=fulltext_index.bak'
curl 'http://127.0.0.1:8983/solr/vertex_index/replication?command=restore&location=/opt/modules/solr/backup&name=vertex_index.bak'
curl 'http://127.0.0.1:8983/solr/edge_index/replication?command=restore&location=/opt/modules/solr/backup&name=edge_index.bak'
# 3. Check the backup and restore details
curl "http://localhost:8983/solr/fulltext_index/replication?command=details"
```
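The backup and restore calls follow one URL pattern per index. A small sketch that generates them is shown below. The helper name `replication_urls` is hypothetical, and the host, port, and backup directory are the values used in this section.

```shell
# Sketch: build the replication-handler URL for each Atlas index.
SOLR_URL=http://127.0.0.1:8983/solr
BACKUP_DIR=/opt/modules/solr/backup

replication_urls() {
  cmd=$1   # backup or restore
  for idx in fulltext_index vertex_index edge_index; do
    echo "$SOLR_URL/$idx/replication?command=$cmd&location=$BACKUP_DIR&name=$idx.bak"
  done
}

# Print the curl commands for a restore; run them as the solr user.
replication_urls restore | while read -r url; do
  echo "curl '$url'"
done
```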

## 8.3. Starting Atlas

````shell
# Run from the Atlas installation directory
bin/atlas_start.py
# Wait 10 to 20 minutes, then open http://lab2:21000
````

# 9. Known Issues and Fixes

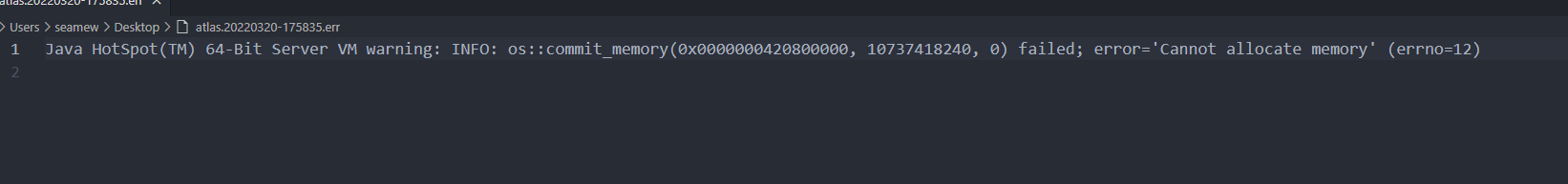

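## 9.1. Atlas Fails to Start

```shell
# The likely cause is that Atlas requests more memory than the system allows
# Change the Linux configuration to permit large memory allocations
# Free cached memory
echo 3 > /proc/sys/vm/drop_caches
# Fix:
echo 1 > /proc/sys/vm/overcommit_memory
# This takes effect only until the next reboot
# To make it permanent, add vm.overcommit_memory=1 to /etc/sysctl.conf and run sysctl -p
# This is a workaround for a development environment; in production, tune the JVM parameters instead so that every program has enough memory to run
```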
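The permanent variant of the fix can be applied without duplicating the line on repeated runs. The sketch below takes the config file path as a parameter so it can be tried on a scratch file first. The function name `persist_overcommit` is hypothetical.

```shell
# Sketch: set vm.overcommit_memory=1 in a sysctl config file exactly once.
# The file path is a parameter so the function can be tried on a scratch file first.
persist_overcommit() {
  conf=$1
  if grep -q '^vm\.overcommit_memory' "$conf" 2>/dev/null; then
    # Replace an existing setting instead of appending a second one.
    sed 's/^vm\.overcommit_memory.*/vm.overcommit_memory=1/' "$conf" > "$conf.tmp" && mv "$conf.tmp" "$conf"
  else
    echo 'vm.overcommit_memory=1' >> "$conf"
  fi
}

# Try it on a temporary file. On a real host:
#   persist_overcommit /etc/sysctl.conf && sysctl -p
tmp=$(mktemp)
persist_overcommit "$tmp"
persist_overcommit "$tmp"
cat "$tmp"
```

Calling the function twice, as in the example, leaves a single `vm.overcommit_memory=1` line in the file.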

# Appendix

| Software  | Version    |
| --------- | ---------- |
| java      | 1.8        |
| hadoop    | 3.1.3      |
| zookeeper | 3.4.14     |
| kafka     | 2.11-2.1.1 |
| Hbase     | 2.2.6      |
| solr      | 7.7.3      |
| atlas     | 2.2.0      |